Swift, HTML

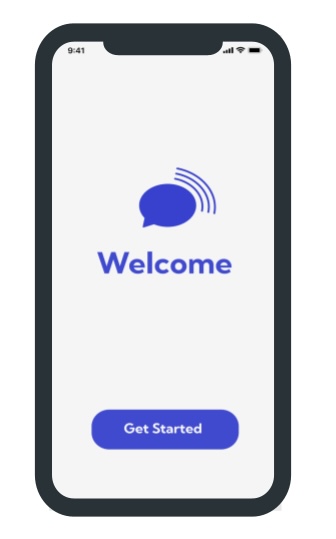

Speech Me.

An app for those who want to practice the pronunciation of English words.

Nearly 10% of all children experience speech-related disorders, yet speech therapy can cost more than $31,000 per year. What if there were a simple, accessible, and affordable way to practice speech and pronunciation?

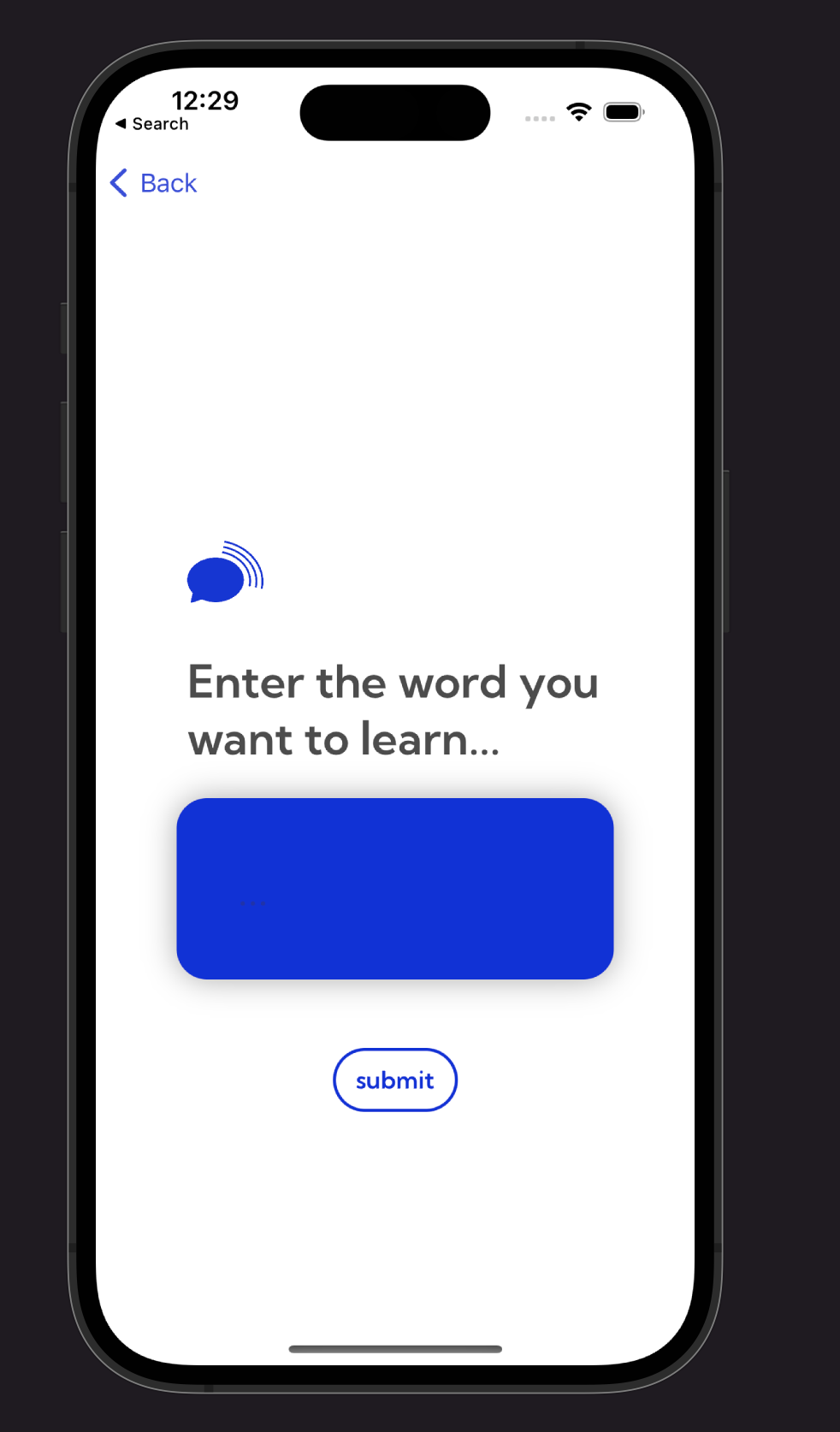

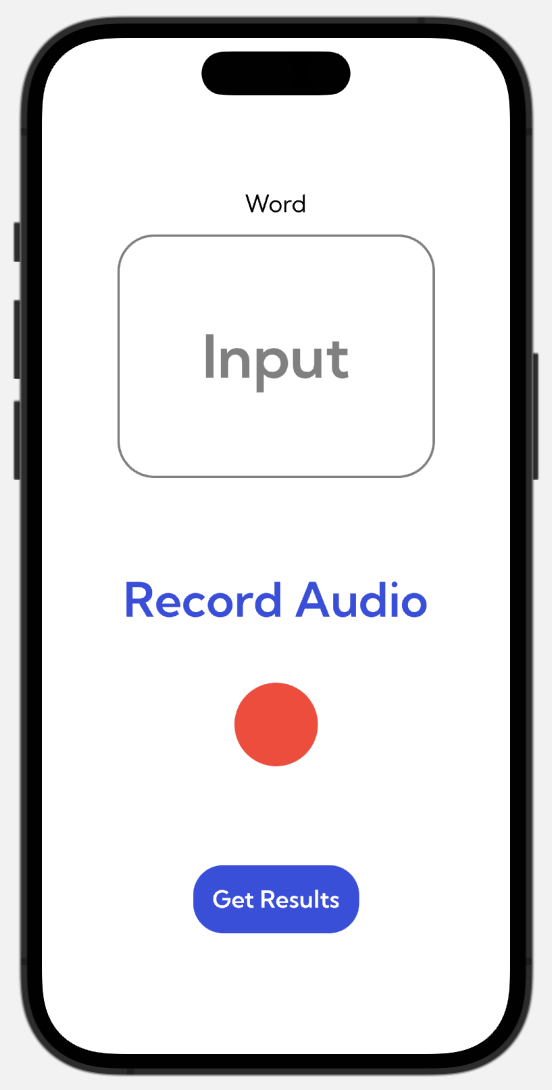

SpeechMe is an iOS app developed for HackHarvard 2022 that uses the user's AV audio recordings for English speech practice. The app sends each recording through the AssemblyAI API for transcription, and the backend then computes the similarity between the transcript and the target word using the Jaro-Winkler algorithm. The resulting pronunciation score prompts users to keep practicing English words. The frontend includes four screens: a welcome screen, a word input screen, a voice recording screen, and a score screen. Users can move between these screens to practice a given word as many times as they want.

Our program architecture consists of three main components: a Swift frontend, a Python backend API, and a Python backend analyzer.

A typical use case flows as follows:

The Swift frontend prompts the user for a word they want to practice, then lets them record an audio file. The frontend sends both inputs to our backend API in a POST request as multipart form data.
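The app itself builds this request in Swift, but the same request shape can be sketched in Python, for example to exercise the backend from a script. This is a minimal sketch under stated assumptions: the form field names `word` and `audio`, the default filename `recording.m4a`, and the helper name `build_multipart_body` are all hypothetical and not taken from the project.

```python
import uuid


def build_multipart_body(word: str, audio_bytes: bytes,
                         filename: str = "recording.m4a"):
    """Return (body, content_type) for a multipart/form-data POST.

    The field names "word" and "audio" are assumptions for illustration;
    the real backend may expect different names.
    """
    boundary = uuid.uuid4().hex
    delimiter = b"--" + boundary.encode("ascii")
    parts = [
        # Text field carrying the word the user wants to practice.
        delimiter,
        b'Content-Disposition: form-data; name="word"',
        b"",
        word.encode("utf-8"),
        # File field carrying the recorded audio.
        delimiter,
        ('Content-Disposition: form-data; name="audio"; '
         f'filename="{filename}"').encode("utf-8"),
        b"Content-Type: application/octet-stream",
        b"",
        audio_bytes,
        # Closing delimiter ends the multipart body.
        delimiter + b"--",
        b"",
    ]
    body = b"\r\n".join(parts)
    content_type = f"multipart/form-data; boundary={boundary}"
    return body, content_type
```

The returned body and `Content-Type` header can then be passed to any HTTP client, such as `urllib.request.Request(url, data=body, headers={"Content-Type": content_type}, method="POST")`, where `url` is whatever address the backend is served at.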

Our Flask API receives this data and sends the audio file to AssemblyAI’s speech-to-text API. Once it receives the transcript of the audio, our backend analyzer takes the two strings (the word the user entered and the transcript) and runs the Jaro-Winkler algorithm on them. The algorithm returns a similarity score between 0 and 1, which we scale to an integer from 0 to 100 and return to the frontend.
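The scoring step can be sketched as follows. This is an illustrative, self-contained version of the standard Jaro-Winkler similarity, not necessarily the code the project uses (it may rely on a library instead). The function names, the prefix scale of 0.1 with a 4-character cap, rounding to the nearest integer, and the normalization step (lowercasing and stripping surrounding punctuation) are assumptions.

```python
import string


def jaro(s1: str, s2: str) -> float:
    """Jaro similarity in [0, 1]."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    # Characters match only if equal and within this distance of each other.
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(len2, i + window + 1)):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    k = 0
    out_of_order = 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order // 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1: str, s2: str, prefix_scale: float = 0.1,
                 max_prefix: int = 4) -> float:
    """Jaro similarity boosted for a shared prefix of up to max_prefix chars."""
    base = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1, s2):
        if a != b or prefix == max_prefix:
            break
        prefix += 1
    return base + prefix * prefix_scale * (1 - base)


def pronunciation_score(target: str, transcript: str) -> int:
    """Scale the similarity to an integer from 0 to 100.

    Normalization is an assumption: transcripts often come back
    capitalized and punctuated, which would otherwise lower the score.
    """
    strip_chars = string.punctuation + string.whitespace
    a = target.strip(strip_chars).lower()
    b = transcript.strip(strip_chars).lower()
    return round(jaro_winkler(a, b) * 100)
```

For the classic textbook pair, `jaro_winkler("MARTHA", "MARHTA")` is about 0.961, so `pronunciation_score("martha", "marhta")` gives 96. A transcript that matches the target exactly scores 100, and an empty transcript scores 0.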

Lastly, the frontend takes the return value from the POST request and displays it to the user as the score.